This is an automated archive made by the Lemmit Bot.

The original was posted on /r/machinelearning by /u/thrownicecatch on 2024-10-16 01:52:11+00:00.

Hi, long-time lurker and first-time poster. Basically the title.

Over the last few years of MICCAI (International Conference on Medical Image Computing and Computer-Assisted Intervention, the premier conference for medical image analysis research), I have noticed a worrying trend in health equity and fairness research: authors designating unexpected dataset attributes as “sensitive attributes” in their analysis and modeling:

- [MICCAI 2022] FairPrune: Achieving Fairness Through Pruning for Dermatological Disease Diagnosis: Early Accept (an early accept is a paper that received accept decisions from all reviewers and therefore skipped the rebuttal phase).

- The authors use dermatoscopic (aka dermoscopic) images from the ISIC 2019 dataset and use the gender label as the “sensitive attribute” with the female group as the “privileged group”, simply because “The vanilla trained model has a higher accuracy on the female images.”

- [MICCAI 2023] Toward Fairness Through Fair Multi-Exit Framework for Dermatological Disease Diagnosis

- This paper shares one author with the previous paper, and they make the same assumption: “we take gender as our sensitive attribute” and “The female is the privileged group with higher accuracy by vanilla training.”

- [MICCAI 2024] BiasPruner: Debiased Continual Learning for Medical Image Classification: Best Paper Award Nominee.

- Patient age, binarized at 60 years (age ≥ 60 vs. age < 60), is used as the sensitive attribute for dermatoscopic images from the HAM10000 dataset. This paper is by a different set of authors.
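To make the selection rule quoted above concrete, here is a minimal sketch in Python. It assumes you already have vanilla-model predictions and one metadata label per image; the function names (`group_accuracies`, `privileged_group`, `age_bin`) and the toy data are hypothetical and not taken from any of these papers' code. It computes accuracy per subgroup and, following the quoted rule, labels whichever group scores higher as “privileged”; `age_bin` mimics the age ≥ 60 / age < 60 split.

```python
from collections import defaultdict


def group_accuracies(y_true, y_pred, groups):
    """Accuracy of the predictions within each subgroup label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


def privileged_group(y_true, y_pred, groups):
    """Hypothetical helper: call the group with the highest vanilla accuracy 'privileged'."""
    acc = group_accuracies(y_true, y_pred, groups)
    return max(acc, key=acc.get), acc


def age_bin(age, threshold=60):
    """Binarize age at a fixed threshold (60 years, as in the BiasPruner setup)."""
    return "age>=60" if age >= threshold else "age<60"


if __name__ == "__main__":
    # Made-up toy labels, purely for illustration.
    y_true = [0, 1, 1, 0, 1, 0]
    y_pred = [0, 1, 0, 0, 1, 1]
    gender = ["female", "female", "male", "female", "male", "male"]
    group, acc = privileged_group(y_true, y_pred, gender)
    print(group, acc)  # → female {'female': 1.0, 'male': 0.3333333333333333}
```

Note that nothing in this rule looks at the images themselves: any metadata column passed as `groups` will yield a “privileged” group.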

Why are gender and age strange choices?

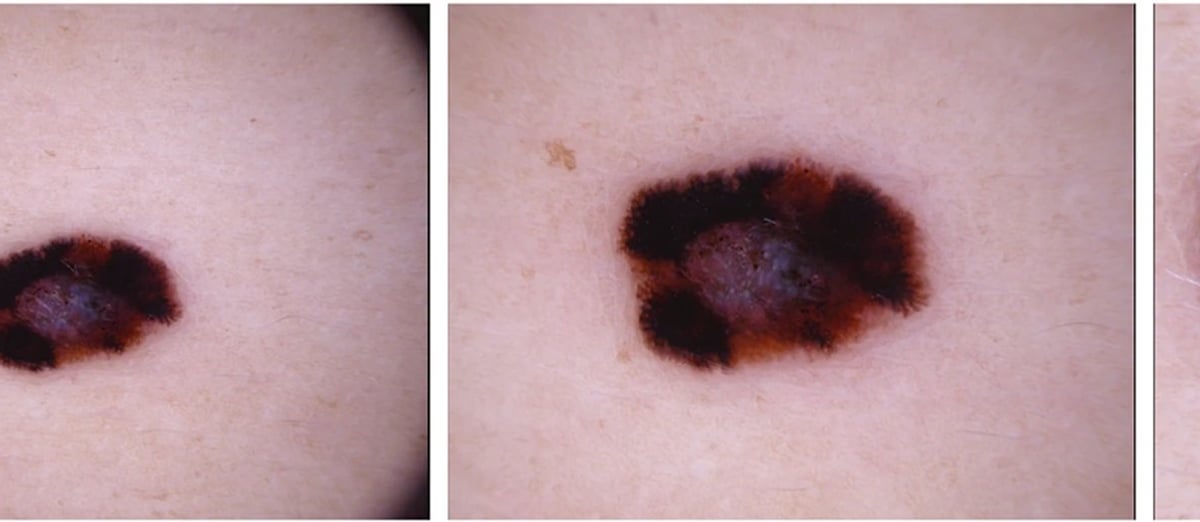

Dermatoscopic images are acquired with a dermatoscope and have an extremely small field of view (FOV). For sample images, see this imgur link: . As you can see, it should be impossible for these small-FOV images to contain any age- or gender-specific information that could be a source of bias. It would be understandable if age and gender were treated as bias sources for CXRs (chest X-rays), or skin tone for skin images, but age and gender are not even visible in these dermatoscopic images. How can they be sources of bias? And why an age threshold of 60 years?

Are these simply instances of HARKing (Hypothesizing After the Results are Known), given that there is no literature (to the best of my knowledge) showing that age and gender are reflected in dermatoscopic images? I also worry that if a similar analysis were carried out with any other unrelated metadata as the sensitive attribute (e.g., skin tone in CXRs), the reported performance might still improve, but that would not mean CXRs have skin-tone-related bias.
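One standard way to check whether a subgroup accuracy gap is larger than chance is a permutation test; to be clear, this is not something the papers above describe. The minimal sketch below assumes a binary per-sample correctness vector and a group label per sample (both hypothetical inputs). It shuffles the group labels many times and reports how often a gap at least as large as the observed one appears by chance.

```python
import random


def accuracy_gap(correct, groups):
    """Max minus min accuracy across the groups present in `groups`."""
    by_group = {}
    for c, g in zip(correct, groups):
        by_group.setdefault(g, []).append(c)
    accs = [sum(v) / len(v) for v in by_group.values()]
    return max(accs) - min(accs)


def permutation_p_value(correct, groups, n_perm=10_000, seed=0):
    """Observed gap and its permutation p-value under shuffled group labels."""
    rng = random.Random(seed)
    observed = accuracy_gap(correct, groups)
    shuffled = list(groups)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # breaks any real link between group and correctness
        if accuracy_gap(correct, shuffled) >= observed:
            hits += 1
    # +1 correction keeps the p-value strictly positive
    return observed, (hits + 1) / (n_perm + 1)


if __name__ == "__main__":
    # A made-up attribute assigned at random: any gap here is pure chance.
    rng = random.Random(1)
    correct = [1 if rng.random() < 0.8 else 0 for _ in range(200)]
    random_attr = [rng.choice("AB") for _ in range(200)]
    print(permutation_p_value(correct, random_attr, n_perm=2000))
```

A randomly assigned attribute will almost always show some nonzero accuracy gap, which is why a gap alone says little about whether an attribute is a genuine source of bias.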

Please help me understand how these sensitive attributes are chosen. Thank you in advance.